
I took Apple Visual Intelligence to an art gallery to act as my tour guide — here’s how it did

Apple Visual Intelligence provides iPhone 16 owners with a convenient and versatile tool for learning more about the world around them. The demos from Apple during September’s iPhone 16 launch event showed this off with examples of identifying a dog’s breed or finding out about a concert from a poster — experiences similar to my initial tests using the iOS 18.2 beta. But I decided to look elsewhere for a more rigorous test of Visual Intelligence.

If Visual Intelligence is meant to tell you about unfamiliar things in front of you, providing the context you wouldn’t necessarily get otherwise, then trying it out on some paintings seemed an ideal application. Painting is a visual medium that can be examined from all sorts of angles, but unless you have a degree in fine arts, it can be hard to approach. On a recent visit to London’s Tate Britain gallery, I tried to change that.

My mission was to find and experience a particular exhibition at the gallery, with the hope that Visual Intelligence would help make the trip an easy but enlightening day out. While art like this isn’t to everyone’s taste, it’s something I and many others want to know more about. And perhaps that’s something Apple Intelligence can help with.


Reading a map with Visual Intelligence

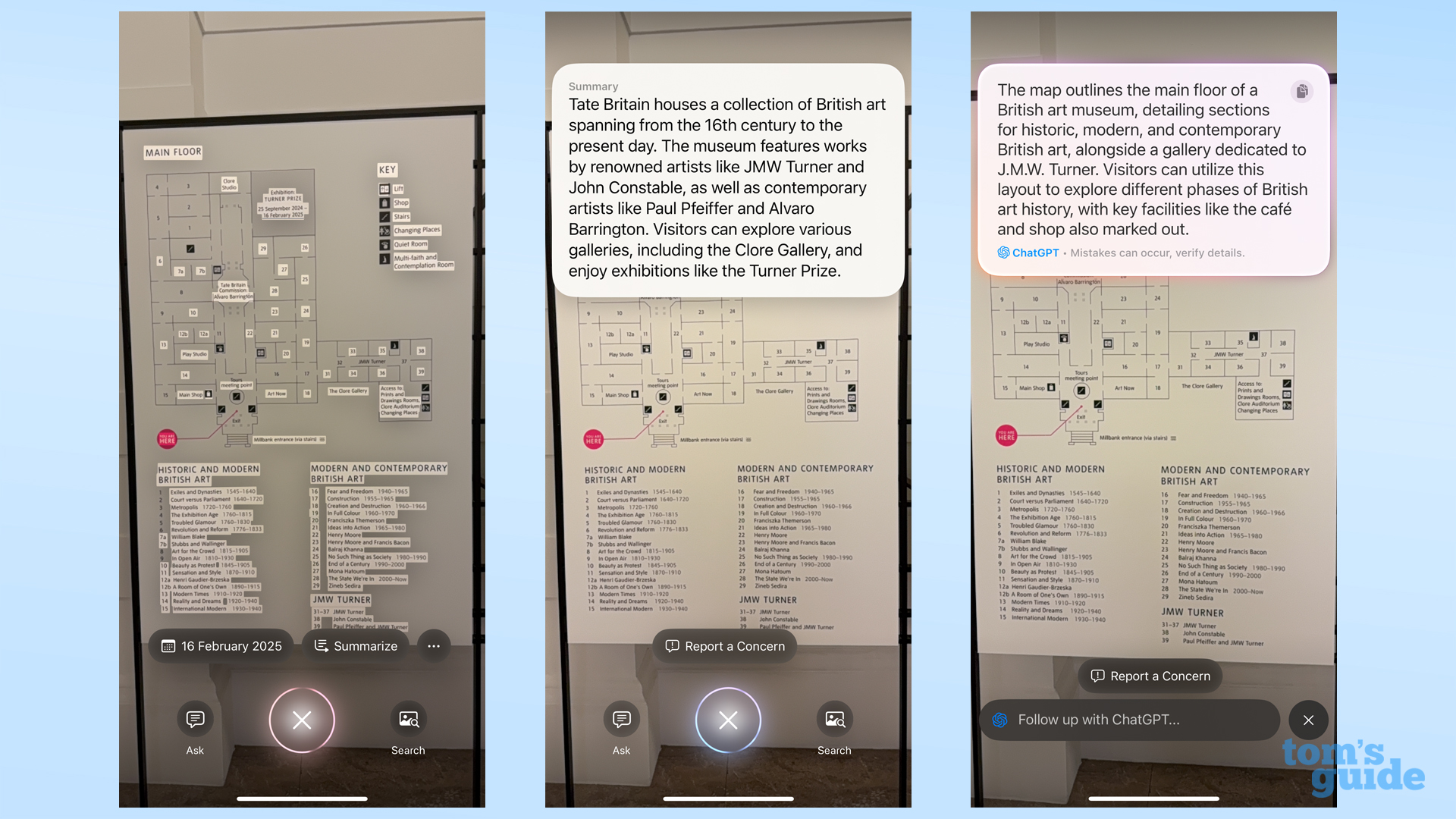

Upon entering the Tate Britain, I did the obvious thing and looked for a map to direct me to the artist I wanted to check out: British Romantic painter J.M.W. Turner. Fortunately, this map was easy to read and decipher by myself, but I pointed my iPhone at it all the same. If this map were in another language or alphabet, I’d absolutely need help reading it, and that’s where I hoped Visual Intelligence could come in.

Luckily for me, this map was oriented vertically, matching the portrait view that Visual Intelligence expects. After taking a snap, the iPhone quickly identified most (but not all) of the text on the sign. From here I had two practical options — get Apple Intelligence to explain the sign to me itself, or pass it on to ChatGPT with the “Ask” button.

Apple Intelligence offered to summarize the text and also pulled out the date 16 February 2025 (the end date of a temporary exhibition). The summary told me the name of the gallery, some of the notable artists within, and a couple of the spaces I could visit. All useful things to know, but none of it would help me get to the Turner paintings.

So I then tried ChatGPT. Weirdly, it couldn’t tell me exactly where I was, only that this was a map of a British art museum. Fortunately I could ask follow-up questions, so I asked where I might find the Turner exhibit. ChatGPT was able to indicate the paintings were at the bottom right of the map, but it misread the room numbers and left out some of the rooms that were part of the exhibit. Not a big error, but one that could have caused confusion had I not been able to read the map in the first place.

Identifying the artist with Visual Intelligence

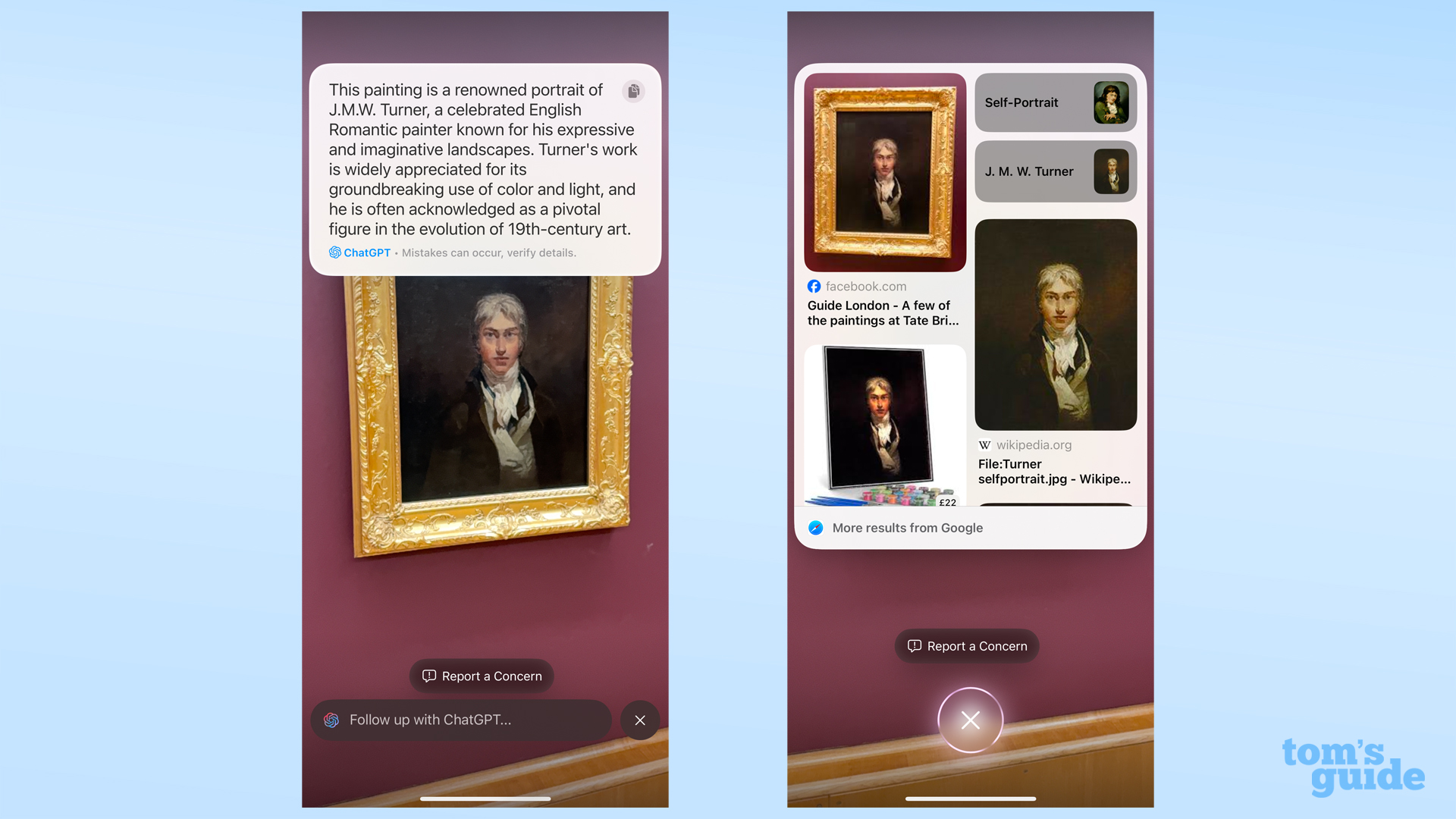

Entering the exhibit, you come across one of the most famous portraits of Turner — a prime target for Visual Intelligence. ChatGPT ID-ed him straight away, and gave some relevant context.

I also tried the Search function (effectively a shortcut to Google Lens), which worked well, too, showing me results for that exact image online. Tapping one of these results opens a pop-up browser over the Visual Intelligence interface, which works okay, but I’d have preferred things to stay within Visual Intelligence or take me straight to my full browser app as opposed to this half-measure solution.

Visual Intelligence zooming limitations

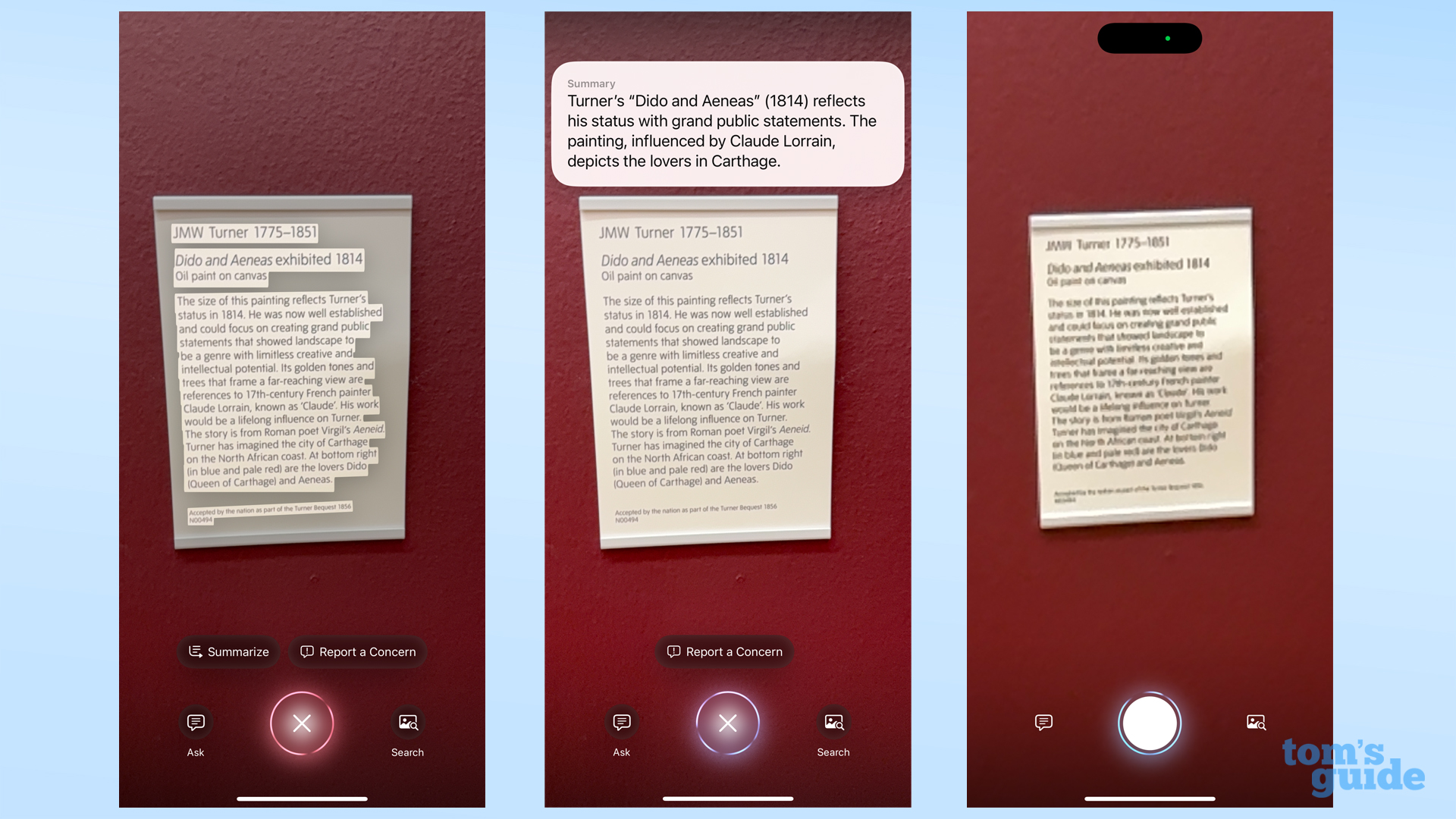

A criticism I made of Apple Visual Intelligence the first time I tried it was that it can only zoom in digitally. Despite the iPhone 16 Pro Max I was carrying having an excellent 5x telephoto camera on it, it wasn’t supported in Visual Intelligence. And that fact became extra annoying in the Tate Britain.

The signs next to each painting are easy to read from a distance, but if you try zooming in to get the whole panel in Visual Intelligence’s viewfinder, you end up with a blurry mess.

I had to stand right up against the wall to get a clear image of the sign that Visual Intelligence could summarize, which it did well, albeit with too little detail for my liking. But had there been a barrier in between me and the wall or an anxious security guard wondering why I wanted to get so close to these 200-year-old masterpieces, this would have been a problem.

Fact-checking with Visual Intelligence

One of the most famous Turner paintings in this gallery is Norham Castle, Sunrise, a stunning, near-abstract depiction of a castle in the north east of England. On a nearby sign, I learned that this was based on a print Turner originally made for a book, rather than painted from scratch. This seemed an interesting fact, so I checked to see if Visual Intelligence could tell me about it.

The Search function wasn’t much help, only providing image results with no option to refine the query. Meanwhile, ChatGPT took two prompts to correctly ID the painting, then told me about the castle it’s based on when I asked.

Realizing my question was probably too vague in this instance, I then asked more clearly if this painting was based on a book, at which point ChatGPT explained the relationship between the print and the painting. I got where I needed to in the end, but only because I knew what the end goal was at the start.

Recognizing lesser-known works with Visual Intelligence

Although the Google search results aren’t as versatile as what you can get from ChatGPT, I found them 100% accurate in my testing, unlike the chatbot’s answers. For instance, looking at a lesser-known work by Turner, Ploughing up Turnips, near Slough, the Search function correctly found matching results online. When I used the Ask button to consult ChatGPT, it misidentified the artist, and then the painting, even after I told it that this was a Turner piece.

It shows off a big limitation to ChatGPT integration with Apple Intelligence — every chat is an entirely separate interaction. If you do something similar via the dedicated ChatGPT app, it can keep your prior messages in mind when answering further questions. But for regular iPhone 16 users who want to try Visual Intelligence out without signing up for anything extra, this means you’re starting from scratch with each new image you take, requiring you to explain stuff to the phone over and over again.

Sharing stories with Visual Intelligence

My favorite painting hanging in this gallery is Regulus, not only because it looks incredible, but because of the legend surrounding it — that Turner accidentally stabbed through the canvas while painting the sun because he was trying to make it so blindingly white.

This is exactly the kind of story you’d hope Visual Intelligence could tell you, so I asked it about the painting. After a false start where it mistook the setting for Venice rather than Carthage, I eventually got the story I wanted by asking specifically about damage to the painting during its creation. Asking for general trivia, or about damage in general, didn’t surface it.

Explaining relationships with Visual Intelligence

One last test I gave Visual Intelligence was to explain why paintings by another artist — John Constable — were hanging in the same part of the museum. Google identified the painting straight away, but the links only showed results about that painting, which wouldn’t help me with my question as to why it was hanging here in particular.

Once again, ChatGPT took an extra prompt to recognize the painting and its creator, but it was able to explain the temporal and stylistic link between Turner and Constable, giving basically the same explanation as the gallery gave on a sign at the entrance to that room.

A picture’s worth a thousand prompts

My time trying Visual Intelligence at the Tate Britain has shown me that the three main components that make up the feature — the way Visual Intelligence itself captures information, plus the Google and ChatGPT-powered cores that provide the actual content — have quite different levels of competency. But together they can offer a reasonable amount of generally accurate information, less like having a museum curator in your pocket and more like a know-it-all uncle who tends to misremember that art class he took in college one time until you correct him.

First off, Visual Intelligence has proved convenient to use and capable of helping without any other services, thanks to its text recognition, its summary abilities and its integration with other Apple apps. But it really needs to work with optical zoom cameras where available for moments when you can’t get close to your subject. It would also benefit from offering more and wider options for exploring an image beyond the two services it currently connects to.

I feel that the Google search results are the most reliable part of the Visual Intelligence package, but they’re also the least integrated. The fact that the results are often only images can be a hindrance, and it’s odd given that a regular Google search in a browser is more than happy to provide an AI summary or featured text excerpt to tell you about what you’re looking for.

Lastly we have ChatGPT, which would benefit from having a larger context window to explain more things over several shots, rather than needing prompting over and over again. Maybe that can’t happen because ChatGPT’s server costs have to be balanced against Apple offering its services to iPhone 16 users for free, but it’s on my wishlist all the same. Greater accuracy, or perhaps the option to add a written prompt along with an image when you first ask about it, could also help focus the results on what you need more quickly.

As a tool for learning, Visual Intelligence has shown it has a lot of potential, and if Apple can build on it with future iOS updates and hardware generations, iPhone owners may end up with one of the best educational tools around. But right now, and perhaps for a long time to come, it’s still quicker to look for a sign or ask a nearby expert for an accurate rundown on a painting than to prod ChatGPT over and over again to get the artist’s name right.
